3 signs you’re ready to A/B test – and 2 signs you’re not

A/B testing is powerful, but only if you have the traffic to support it. Here are the clear signs that your SaaS is ready to move from implementation to experimentation.

How to know when testing will actually move the needle (and when it’ll just waste time)

Done right, A/B testing isn’t guesswork – it’s how you turn data into real decisions. But most teams start testing too early, or for the wrong reasons.

They launch an experiment hoping for quick wins, but end up with flat results, inconclusive data, or five different opinions on what the numbers “really mean.”

After running hundreds of tests, I’ve found that success has less to do with tools or tactics – and more to do with timing.

There’s a point where testing starts to make sense – and another where it doesn’t.

Here’s how to tell which side you’re on.

3 signs you’re ready to A/B test

These are the markers I look for when deciding whether a client is ready to test – and where testing will actually move the needle.

1. You have a clear, measurable goal

If you can’t define what "better" means, you can’t test for it.

Testing only works when you’re optimizing a specific step in the funnel – like increasing demo requests, improving sign-up conversion, or reducing form drop-off.

Without a clear metric, you’re just changing design, not running an experiment.

Quick check: can you fill in this sentence?

"We’re testing this variation to increase [metric] because [reason]."

If not, you’re not ready to test — yet.

Testing belongs at the conversion stage — after awareness and consideration have already done their job.

Awareness → Consideration → Conversion (testing zone)

That’s where you can measure user action, not just attention.

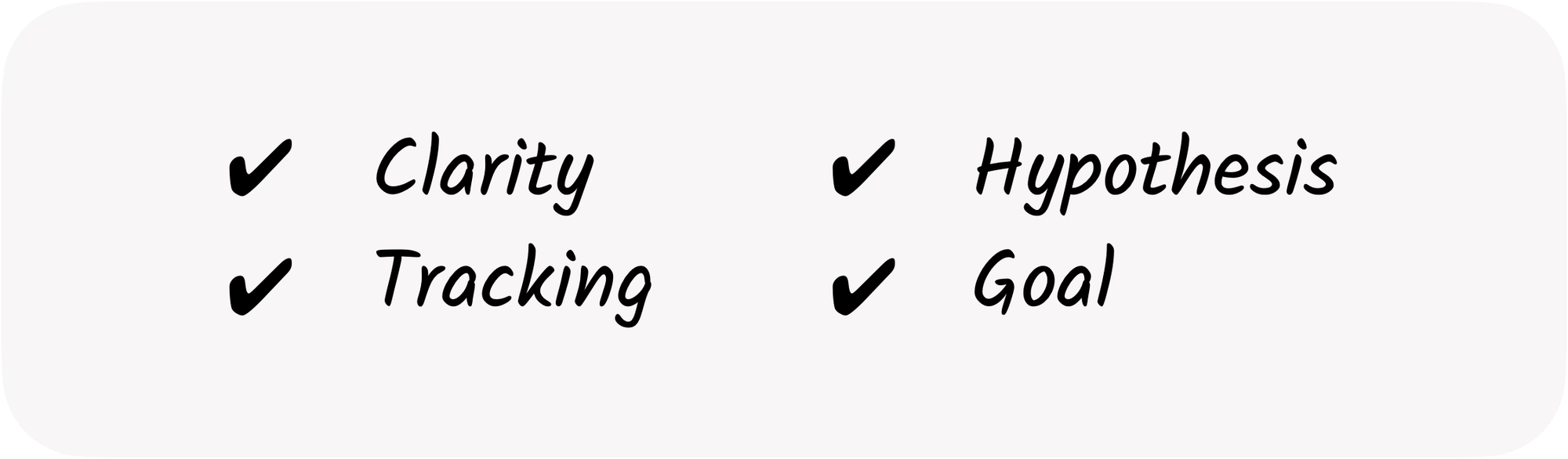

2. You’ve already fixed the obvious issues

A/B testing isn’t for finding broken links, slow pages, or unclear messaging.

It’s for optimizing what’s already good – to make it great.

If your value proposition is unclear, or your tracking or UX is broken, A/B testing won’t help – it’ll just confirm what’s broken.

Before testing, make sure you’ve already:

- Fixed technical and UX issues

- Clarified your message

- Set up conversion tracking

- Collected some qualitative feedback

Once the basics are solid, then you’re ready to test.

3. You have enough data to reach significance

You don’t need enterprise-level traffic – but you do need enough volume to reach statistical confidence and trust your result.

A/B testing is about learning from data, not guessing based on early trends. If your test doesn’t collect enough conversions to reach significance, you can’t tell a real effect from coincidence. It’s the difference between learning something and just hoping you did.
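To make "significance" concrete, here is a minimal sketch of a two-proportion z-test, one common way to compare two conversion rates. The function name and the visitor and conversion counts are made up for illustration, and your testing tool may use a different method (Bayesian or sequential, for example).

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for the difference between two conversion rates.

    conv_a / conv_b: conversions in each variant
    n_a / n_b: visitors in each variant
    Returns (z, p_value).
    """
    pooled = (conv_a + conv_b) / (n_a + n_b)
    if pooled in (0, 1):
        raise ValueError("Need at least one conversion and one non-conversion")
    # Standard error of the difference under the "no real difference" assumption
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical numbers: the same 4% vs 6% conversion rates at two traffic levels
_, p_small = two_proportion_z_test(4, 100, 6, 100)
_, p_large = two_proportion_z_test(40, 1000, 60, 1000)
print(f"100 visitors per variant:  p = {p_small:.3f}")
print(f"1000 visitors per variant: p = {p_large:.3f}")
```

The observed rates are identical in both cases. Only the larger sample gets below the conventional 0.05 threshold, which is why an early trend on thin traffic isn’t evidence yet.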

The goal isn’t to test faster — it’s to test smarter. Wait until the data can speak clearly.

If your traffic volume is limited, focus on fewer, higher-impact tests rather than running multiple experiments at once. It’s better to get one confident answer than three inconclusive ones.
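If you want a rough sense of whether your traffic is enough, the standard sample-size approximation for comparing two proportions can be sketched like this. The baseline rate, the lift you hope to detect, the 5% significance level, the 80% power, and the daily traffic figure are all assumptions for illustration, so plug in your own.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors needed per variant to detect a relative lift.

    Uses the normal approximation for a two-sided test of two proportions.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical page: 4% baseline conversion, hoping to detect a 20% relative lift
n = sample_size_per_variant(baseline_rate=0.04, relative_lift=0.20)
daily_visitors = 500  # assumed total traffic, split evenly across two variants
days = ceil(2 * n / daily_visitors)
print(f"About {n} visitors per variant, roughly {days} days at {daily_visitors}/day")
```

With these assumed inputs the answer lands at a little over 10,000 visitors per variant. Smaller lifts need far more traffic, which is the practical reason to spend limited volume on fewer, higher-impact tests.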

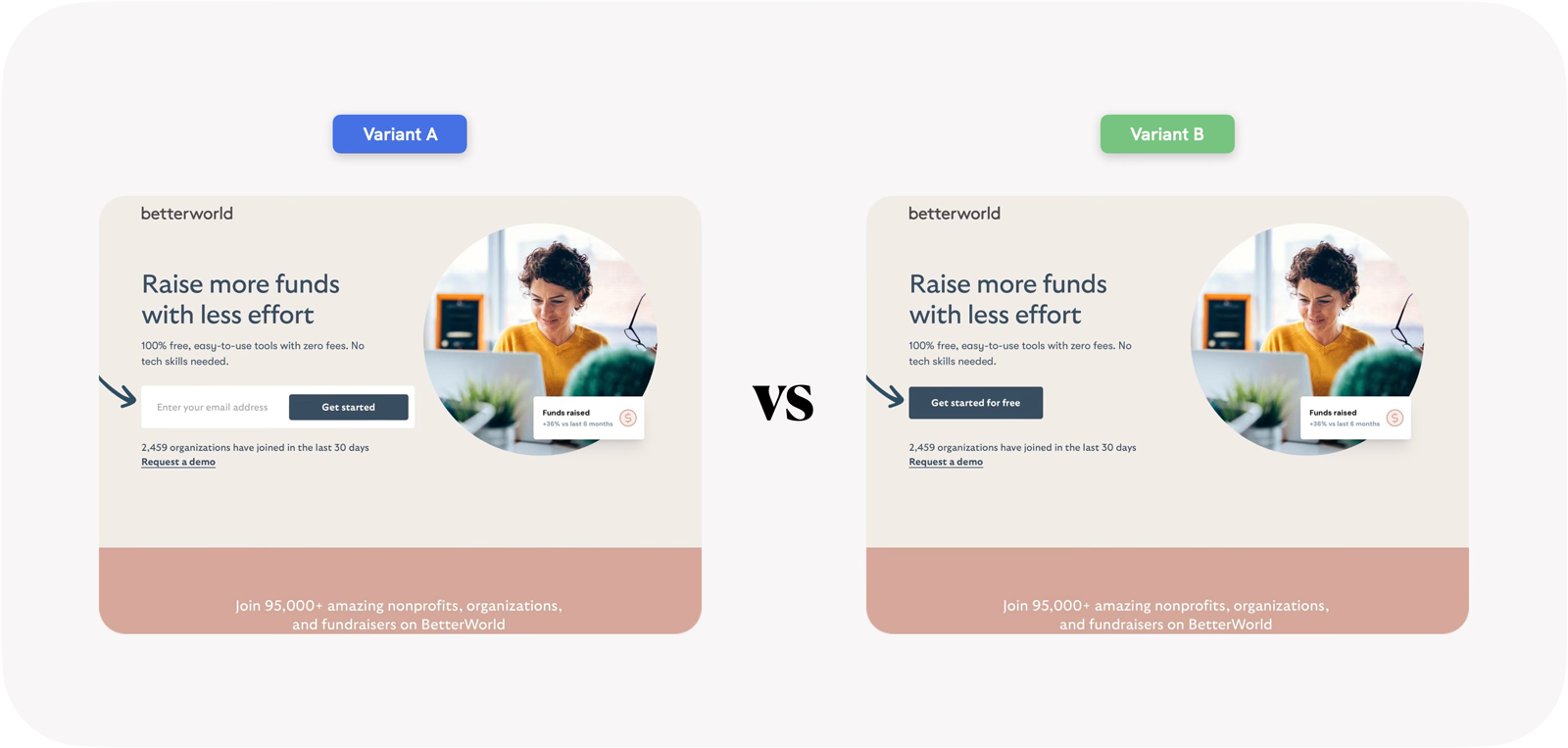

Example: even small design or messaging changes can produce measurable lifts when tested correctly, as in this Betterworld case study.

Variant B was the clear winner, achieving a 99.5% uplift in conversion rate at a reported 100% confidence. (That figure is rounded; no test delivers literal certainty.)

2 signs you’re not ready yet

Not every page or campaign is ready for testing – and that’s okay. Before you start splitting traffic, make sure the foundations are solid.

Here are two common red flags that tell me it’s too early to A/B test.

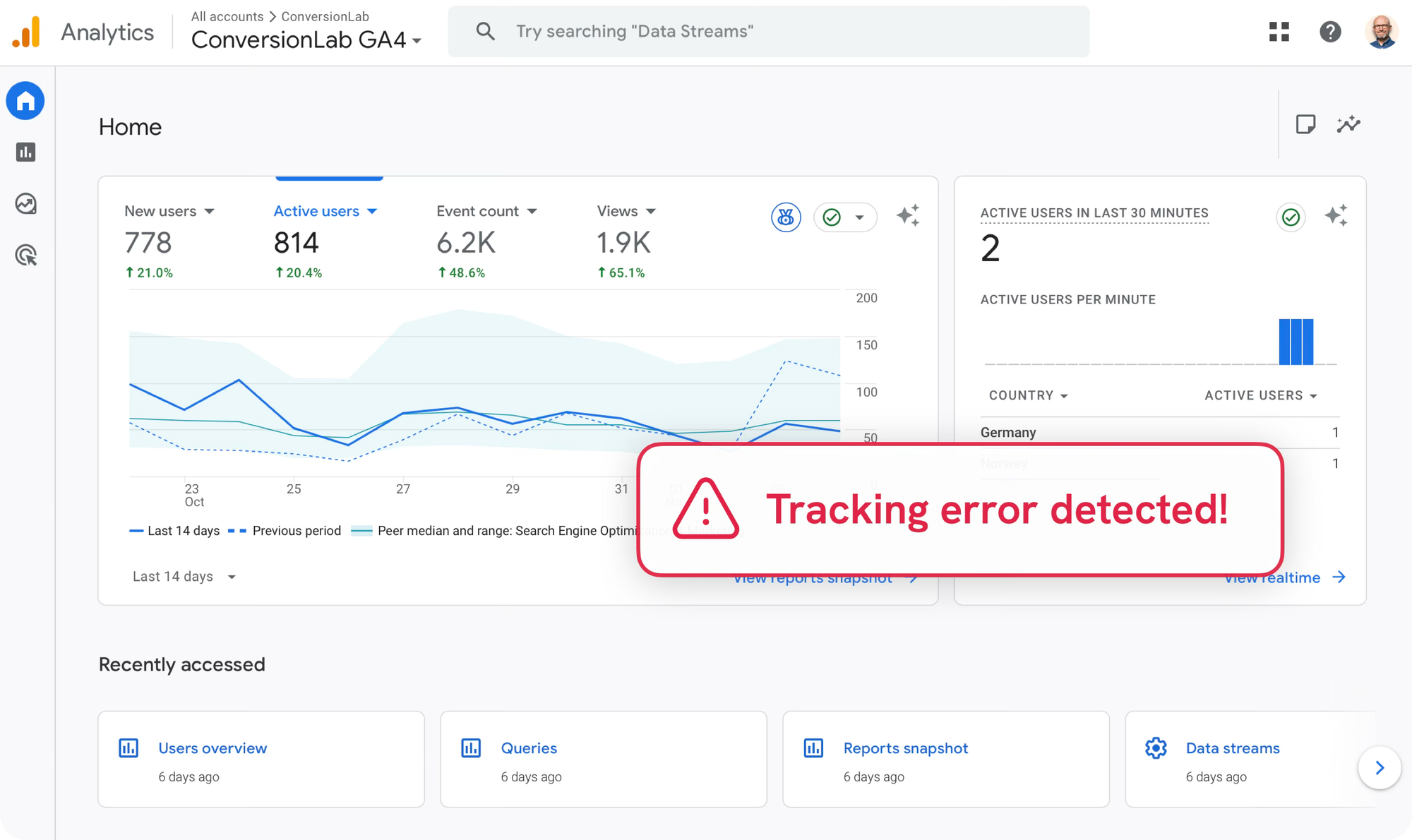

1. You don’t have reliable analytics

If your data is incomplete, inconsistent, or siloed, no test result can be trusted.

I’ve seen teams make big design or pricing decisions based on skewed data — only to learn later their tracking was off by 30%.

A/B testing without clean data is like measuring growth with a cracked ruler.

Before testing, double-check:

- Is conversion tracking firing on every device and variation?

- Is your analytics tool properly connected to your A/B platform?

- Can you segment data by traffic source or campaign?

If any of those are a “maybe,” pause your test until it’s a “yes.”
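One quick way to turn that checklist into a number is to compare what your analytics tool reports against a source of truth, such as signups in your own database. The sketch below assumes you can export both counts per segment. The segment names, the counts, and the 5% tolerance are hypothetical.

```python
def flag_tracking_gaps(analytics, backend, tolerance=0.05):
    """Return segments where tracked conversions deviate from backend counts.

    analytics / backend: dicts mapping segment name -> conversion count.
    tolerance: maximum acceptable relative gap (assumed 5% here).
    """
    flagged = {}
    for segment, backend_count in backend.items():
        if backend_count == 0:
            continue  # nothing to compare against
        tracked = analytics.get(segment, 0)
        gap = (tracked - backend_count) / backend_count
        if abs(gap) > tolerance:
            flagged[segment] = gap
    return flagged


# Hypothetical counts for one week, split by device
analytics = {"desktop": 98, "mobile": 70}
backend = {"desktop": 100, "mobile": 100}

for segment, gap in flag_tracking_gaps(analytics, backend).items():
    print(f"{segment}: tracking is off by {gap:+.0%}")
```

Here desktop is within tolerance, while mobile is missing 30% of its conversions. A gap like that would quietly skew any test result that includes mobile traffic.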

2. You’re guessing, not hypothesizing

Testing random ideas (“let’s try a new button color”) isn’t experimentation – it’s decoration.

I once spoke with a SaaS company that proudly told me they had 130 ideas from 15 colleagues – and 12 tests running at once 😅.

That might sound like momentum, but it’s not. It’s noise – and almost impossible to learn from.

With that many tests in motion, you lose focus, data quality, and control over how experiments influence each other.
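There’s also a simple statistical cost to running many tests at once. As a back-of-the-envelope sketch, assume every test is independent, uses a 5% significance threshold, and none of the changes has any real effect. Both assumptions are simplifications, and the function name is mine.

```python
def chance_of_false_winner(num_tests, alpha=0.05):
    """Probability that at least one of num_tests shows a 'significant'
    result purely by chance, assuming independent tests with no real effect."""
    return 1 - (1 - alpha) ** num_tests


for k in (1, 3, 12):
    print(f"{k:>2} tests: {chance_of_false_winner(k):.0%} chance of a false winner")
```

Under those assumptions, 12 simultaneous tests carry close to a coin-flip chance of producing at least one "winner" that isn’t real.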

A/B testing isn’t about how many ideas you can run. It’s about testing the right ones, for the right reasons.

Every test should start with a hypothesis:

If we [make this change], then [this metric] will improve because [reason].

When you can fill in those blanks, you’re testing with purpose. When you can’t, you’re just guessing.

The takeaway

A/B testing isn’t where optimization begins – it’s where it starts to pay off.

If your foundation is shaky, testing won’t fix it.

But if your page is clear, tracked, and focused, testing can validate insights and unlock bigger gains.

If you’re unsure whether your page or funnel is ready, start smaller: clarify your message, fix the basics, and define what success means.

Then – and only then – start testing.

Great testing doesn’t replace insight – it proves it.

Want fresh eyes on your landing page?

I’ll review one of your pages and share quick, actionable feedback on how to improve clarity, relevance, and conversion rate — completely free.